We are well aware that analyzing large binaries in Binary Ninja right now can use a significant amount of memory. So, as we develop what will become our next release, 3.1, we are focusing on improving performance across the board. As a preliminary step, all Binary Ninja development builds starting from 3.0.3306-dev now include some of these memory usage and performance optimizations.

If you would like to check out these changes and help us test them, you can change your update channel in Preferences -> Update Channel… within Binary Ninja. Just set it to the “Binary Ninja development build” channel, select a version greater than or equal to 3.0.3306-dev, and click “Done”. Once Binary Ninja has downloaded the new version, click the green arrow in the bottom-left corner and Binary Ninja will restart and apply the new update.
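If you script your setup, the "greater than or equal to 3.0.3306-dev" check can be expressed in a few lines. The sketch below is only an illustration: the helper names `parse_version` and `has_memory_optimizations` are made up for this example, and it assumes version strings always look like `major.minor.build` with an optional suffix such as `-dev`.

```python
import re

# First development build that includes the optimizations (from the text above).
MIN_BUILD = (3, 0, 3306)


def parse_version(version: str) -> tuple:
    """Turn a string such as '3.0.3306-dev' into (3, 0, 3306).

    Assumption: the string starts with three dot-separated integers;
    any trailing suffix (for example '-dev') is ignored.
    """
    match = re.match(r"^(\d+)\.(\d+)\.(\d+)", version.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return tuple(int(part) for part in match.groups())


def has_memory_optimizations(version: str) -> bool:
    """Return True if the build number is at or above 3.0.3306."""
    return parse_version(version) >= MIN_BUILD
```

The version string itself can be copied from the update dialog, or, if you use the Python API, most likely read from `binaryninja.core_version()`.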

We tested these optimizations on some of the largest binaries we could find: modern browsers. First up, Google Chrome.

Linux Chrome with Full Symbols

This is a build of Linux Chrome with all symbols left in the binary, which makes it a gigantic 1.37GB in size. In previous versions of Binary Ninja, it was nearly impossible to analyze on a normal development workstation and would likely have required server-class hardware. We are happy to report that a typical high-end development workstation can now analyze this binary with relative ease across all the platforms we support.

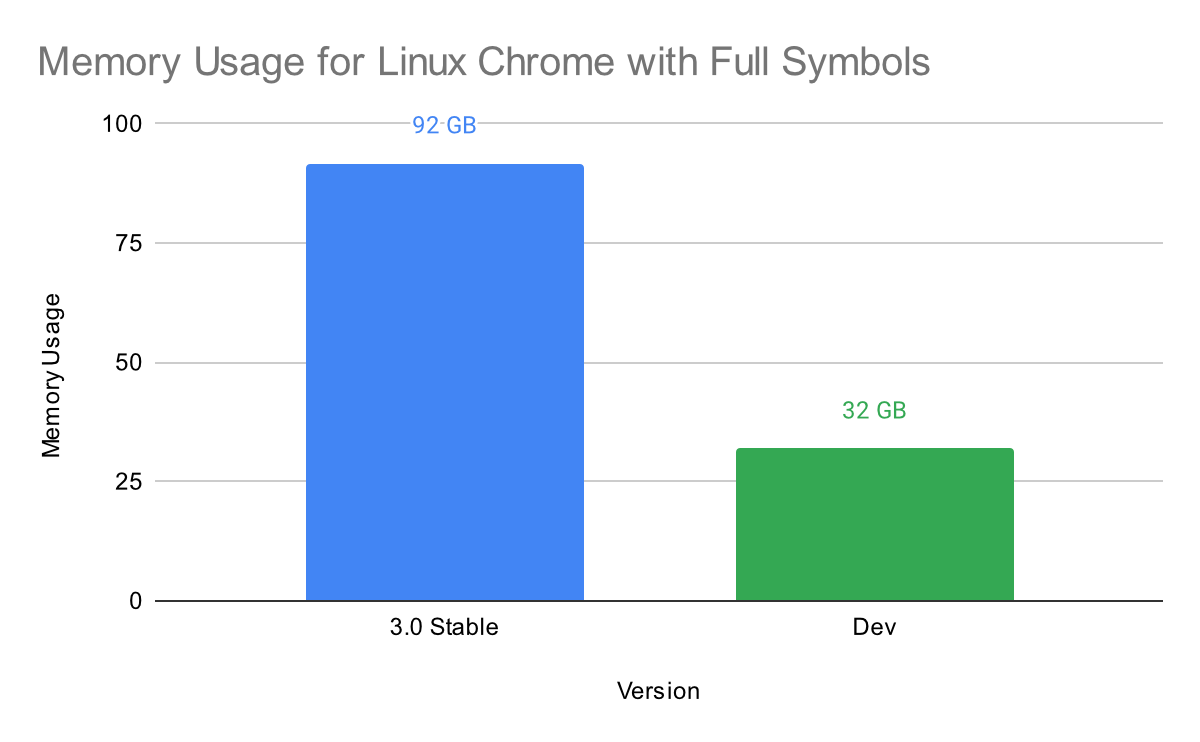

Below is the maximum memory usage after initial analysis of Chrome:

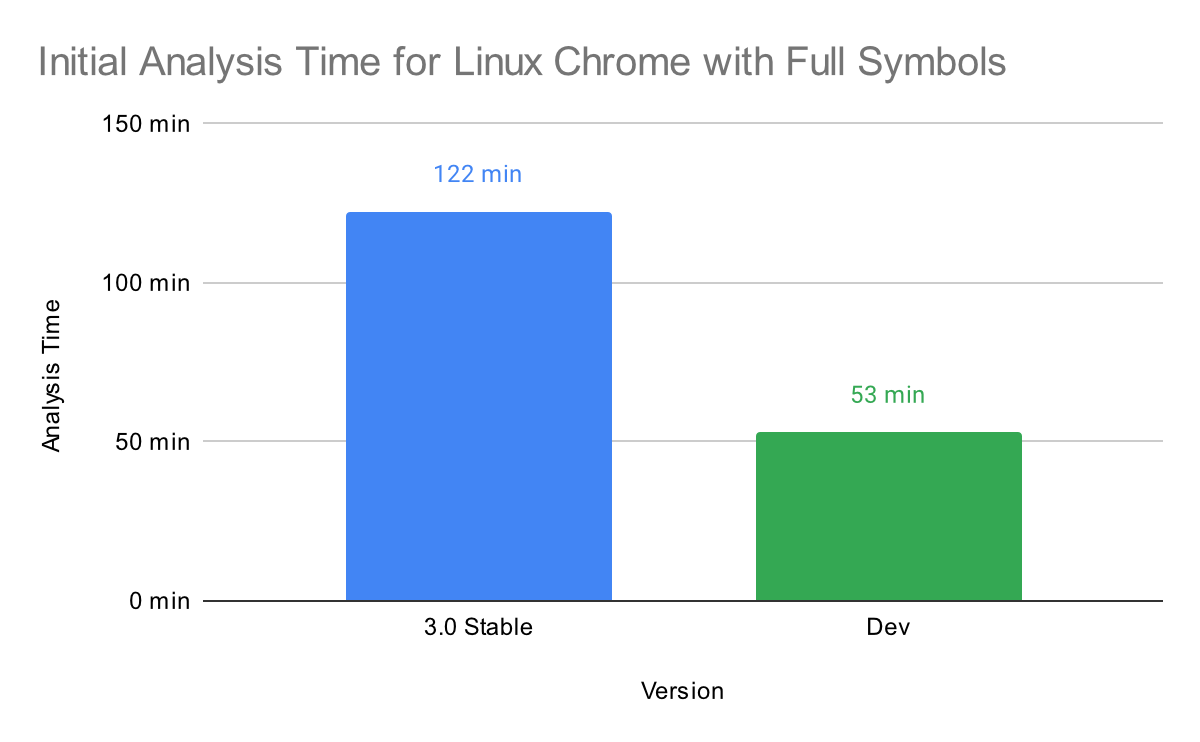

Initial analysis time was also greatly improved, as seen below:

These tests were performed on a large Linux server. How do the optimizations stack up across the other platforms we support? We will now look at a smaller binary, XUL from Firefox, and see the improvements on Windows and macOS.

Firefox XUL across All Platforms

This binary is the x86-64 macOS version of XUL, the core functionality of Mozilla Firefox. It weighs in at 129.9MB, and previous versions of Binary Ninja also used a very large amount of memory when analyzing it.

Tests for Linux and Windows were performed on an AMD Ryzen Threadripper 3970X with 64GB of RAM. Tests for macOS were performed on an Apple M1 Max with 64GB of RAM.
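If you want to collect similar numbers on your own machine, a rough harness might look like the following. This is a minimal sketch and not the tooling used for the results in this post. It assumes a Unix-like system (the standard `resource` module is not available on Windows), and the `measure` and `peak_rss_bytes` helpers are names invented for this example.

```python
import resource
import sys
import time
from typing import Callable, Tuple


def peak_rss_bytes() -> int:
    """Peak resident set size of this process so far, in bytes.

    ru_maxrss is reported in kilobytes on Linux and in bytes on macOS.
    """
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    return peak if sys.platform == "darwin" else peak * 1024


def measure(workload: Callable[[], object]) -> Tuple[float, int]:
    """Run `workload` once; return (elapsed seconds, peak RSS in bytes).

    The peak covers the whole process lifetime, so use a fresh process
    for each measurement to keep results comparable.
    """
    start = time.perf_counter()
    workload()
    elapsed = time.perf_counter() - start
    return elapsed, peak_rss_bytes()


if __name__ == "__main__":
    # Placeholder workload; substitute a function that runs your analysis.
    elapsed, peak = measure(lambda: bytearray(64 * 1024 * 1024))
    print(f"elapsed: {elapsed:.2f}s, peak RSS: {peak / 2**20:.1f} MiB")
```

With the Binary Ninja Python API, the workload would presumably be a function that opens the binary and waits for initial analysis to finish (for example via `update_analysis_and_wait()` on the resulting view).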

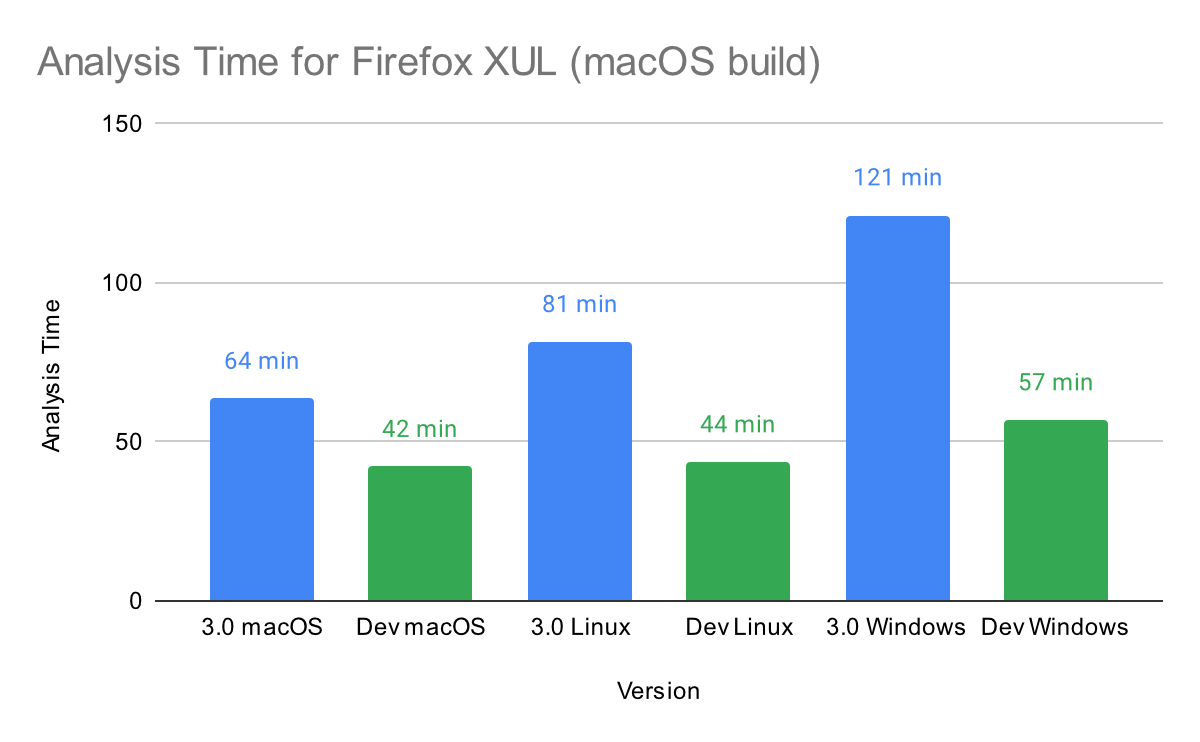

First, we will look at the initial analysis time improvements for all three of our supported platforms:

Analysis time has improved across the board, but Windows performance has improved the most. We are now much closer to performance parity across platforms, and are investigating further improvements. From our testing, the remaining gap on Windows is likely due to Windows having significantly more expensive lock primitives. Because lock contention in Binary Ninja also limits our ability to scale to large numbers of cores, we will be investigating this further in future versions.

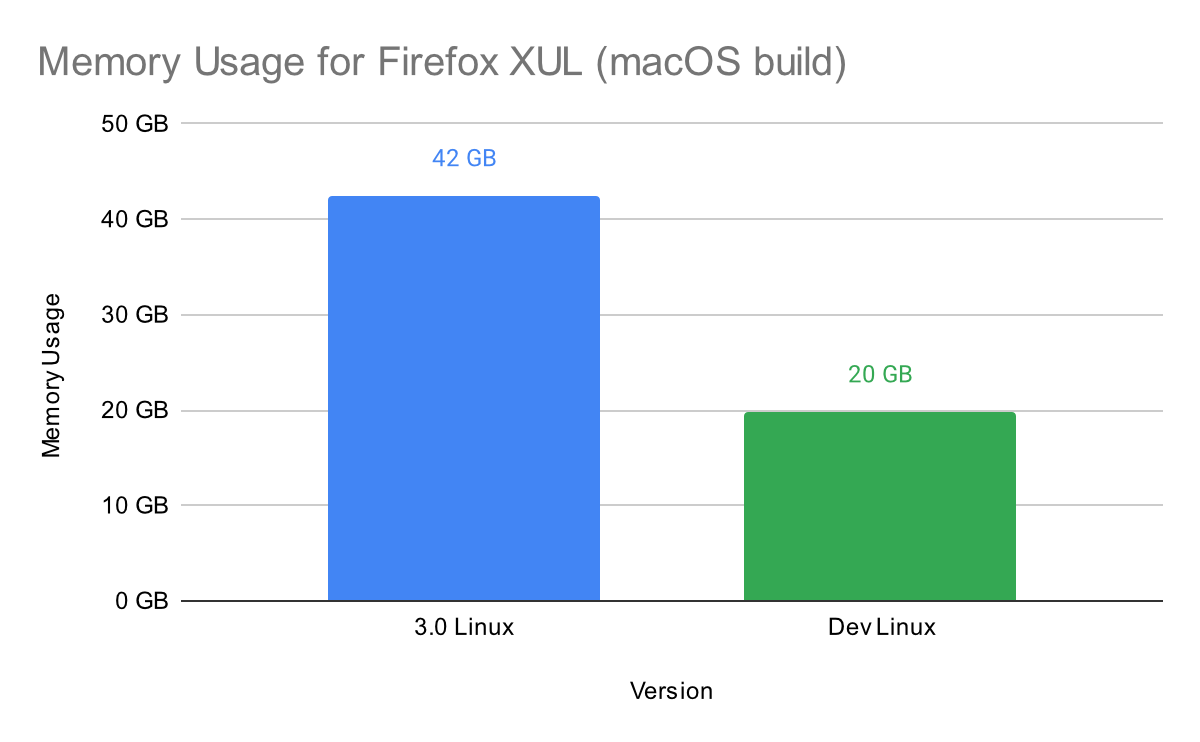

Memory usage also significantly improved for this binary, as shown below:

Future Optimizations

Our work on memory usage and performance for the 3.1 release is not yet complete. We will also be investigating performance when saving and restoring databases, which can take a very long time on large binaries such as these. The current development build already includes some small improvements here, and for the remainder of the 3.1 release cycle we will focus on making work with large databases much smoother.

If you’re testing out these changes in our development builds, let us know what you think in Slack or on Twitter. And, if you encounter any issues, please submit an issue on GitHub so we can get it fixed prior to the final 3.1 release.